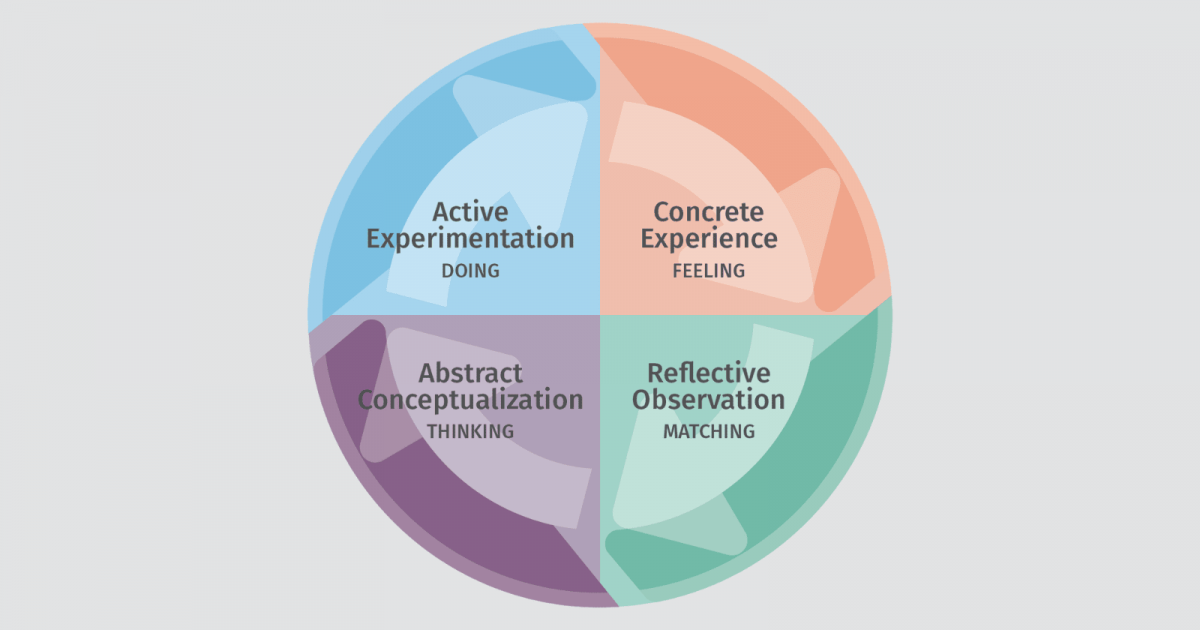

Above: an illustration of the four phases of Kolb’s Experiential Learning Cycle.

As a medical educator, I have been working with simulation for over 20 years. After many years of teaching and graduating residents into independent practice, I realized that much of what I knew about their individual competence was based on a collection of observations and assumptions. I didn’t really know whether they had learned or experienced “everything in the book” before graduation. I call this the “assumption of competence” problem. It stems from the fact that there are too many competencies to assess using in-person methods alone, such as clinical observation, manikin-based simulation, or structured clinical exams.

But with technological solutions that can track performance over time, we can finally assess the breadth and depth of medical competence far more completely than in-person methods allow. In this article, I’d like to discuss ways that we can use technology to incorporate competency-based education into medical training, greatly expanding how much competence we can assess without adding a huge burden to our educators or learners.

To start, let me share some background on competency-based education. The American Association of Colleges of Nursing defines competency-based education as “a system of instruction, assessment, feedback, self-reflection, and academic reporting that is based on students demonstrating that they have learned the knowledge, attitudes, motivations, self-perceptions, and skills expected of them as they progress through their education.”

Competency-based education (CBE) relies heavily on formative assessment: learning by doing and demonstrating competent performance over time until expertise is achieved. In addition to the assessment of skills in practice, it relies on experiential learning as scaffolding, providing opportunities for practice and growth guided by specific competency goals. David Kolb’s experiential learning cycle (illustrated above) maps out the concept, dividing the process into four phases: concrete experience, reflective observation, abstract conceptualization, and active experimentation. Each phase of the cycle also overlaps with a different learning style. According to Armstrong & Parsa-Parsi, 59% of the physicians studied prefer learning through active experimentation with newly acquired knowledge. This preference for a hands-on approach perhaps explains why experiential learning through apprenticeship has been a key component of medical education for hundreds of years.

As a medical educator, my job is to provide learners with training that prepares them for clinical practice. As they gain experience, the training reinforces the complexities of concepts seen in the course of actual patient care, the so-called clinical learning environment. Students, residents, practicing physicians, and nurses therefore spend a lot of time learning by actively taking care of patients in what we call “the apprenticeship model of learning.” This model, informally summed up as “see one, do one, teach one,” has formed the foundation of learning and competency development in medicine. In this model, achieving expertise requires a breadth of real-world experience in order to gain the essential skills and knowledge to practice independently. However, since you can’t always predict what illnesses will occur in the real world, this model relies entirely on the chance of encountering specific diseases over a fixed training period (e.g., residency training). This limitation is significant: the breadth of clinical exposure is solely a function of time and serendipity. Like holes in Swiss cheese, every graduate of such a program will have different knowledge and experience gaps based on the limitations of their clinical exposure. And the most common holes are in the rare conditions that are harder to recognize, diagnose, and treat.

Because of these limitations, apprenticeship learning provides spotty exposure to the range of diseases that we expect clinicians to manage competently. The integration of manikin-based simulation into training over the past 25 years has helped to resolve some of these critical deficits in clinical learning. Aligning with the steps of Kolb’s experiential learning cycle, manikin-based simulation provides invaluable opportunities for active experimentation with new concepts that can be reinforced in the clinical environment through concrete experience. Simulation-based assessments provide opportunities to directly observe and assess individual learner performance in specific core competencies as well as milestones in learning with a high degree of specificity and accuracy. However, this revolution in experiential learning and competency-based training is still very limited in several important ways. It is simply not enough.

As I discussed in a previous article, “Top 6 Problems in Medical Simulation Today,” there are significant limitations in brick-and-mortar simulation training. These limitations restrict our ability to determine competency across a wide array of disease states, rare conditions, and clinical management scenarios. Even with the addition of manikin-based simulations over the past 20 years, we are still graduating clinicians whose knowledge and clinical skills vary widely, which leaves room for medical error and inefficient care in professional practice.

Virtual simulation training, on the other hand, provides unlimited opportunities for high-frequency cognitive practice. Condensed, “low-dose” virtual simulations can compress a 30-minute manikin session into a 5- to 7-minute simulation requiring the same cognitive skills and applied knowledge. Unlike most manikin-based simulations, virtual simulations provide opportunities for repetitive practice and iterative skill development, covering a broad array of diseases, freed from the constraints of physical space and scheduled time. In addition, the privacy of the virtual environment keeps learners motivated even when they fail. Because the cost of failure is low and the reward for success is high, educators can offer more challenging cases and more complex learning.

Virtual simulations offer a transformative solution for several key reasons:

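- They provide a private, judgment-free experience. Gee, borrowing a term from Erik Erikson, calls this a “psychosocial moratorium”: a space where learners can take risks because the real-world consequences are lowered. The learner is freed from the fear of public failure and motivated to practice with a growth mindset.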

- They promote repetitive performance consistent with Ericsson’s theory of deliberate practice in the development of expertise. The interface can provide immediate feedback to promote a more complex and nuanced understanding of clinical topics.

- They leverage technology to provide unlimited opportunities for the high-frequency practice that iterative skill development requires, engaging learners in knowledge application and decision-making.

- They can provide access to training and re-training on rare conditions to ensure that diagnostic competence is maintained over time, independent of what is seen in the clinical environment.

At Full Code, we’ve tried to put Kolb’s learning cycle on fast forward using virtual simulation. We give users quick gameplay, distilling a 20- or 30-minute manikin simulation into a virtual simulation that takes only a fraction of that time. We provide learners with immediate feedback and the opportunity to replay the case repeatedly until they achieve a certain level of performance. In a manikin-based simulation, a learner might get feedback on the four or five critical actions for that simulation case, plus some other factors such as communication, efficacy, and other competencies. But with Full Code, you’re able to measure 30 or 40 parameters on average per case. We track data points covering history, physical exams, stabilizations, key interventions, diagnostic tools, consultations, and selection of the correct diagnosis.

The granularity of the competency assessments that the individual user sees, combined with the ability to privately “practice until perfect,” makes the experience much more tailored and comfortable than a manikin-based simulation. We’ve also constructed the educator dashboard to break down student performance with this same degree of granularity. Educators can use those data to shape their curriculum and decide what to focus on in the classroom or at the bedside. It’s like having a dipstick to check your oil level: at any point in time, learners can see their own competency, and educators can see their learners’ competency, across the breadth of medicine with a high degree of certainty based on hundreds, even thousands, of clinical decisions. This is how virtual simulation can greatly increase efficiency in educational practice and finally solve the “assumption of competence” problem in medical education. We hope that this insight into the skills and knowledge of learners can help support an effective competency-based educational approach and improve the quality of healthcare available globally in the future.

References:

1. Armstrong E, Parsa-Parsi R. “How Can Physicians’ Learning Styles Drive Educational Planning?” Academic Medicine 2005; 80:680–684.

2. Dreyfus SE, Dreyfus HL. “A five-stage model of the mental activities involved in directed skill acquisition.” University of California, Berkeley, CA: Operations Research Center Report 1980.

3. Ericsson KA. “Deliberate practice and acquisition of expert performance: A general overview.” Academic Emergency Medicine 2008; 15:988-994.

4. Gee JP. What Video Games Have to Teach Us About Learning and Literacy. Palgrave Macmillan: New York, NY, 2003.

5. “The ACGME Emergency Medicine Milestones in Training”; www.abem.org/public/publications/emergency-medicine-milestones. Accessed January 3, 2019.

6. Miller GE. “The Assessment of Clinical Skills/Competence/Performance.” Academic Medicine 1990; 65(9):S63-67.